Measuring how motivation affects information quality assessment: a gamification approach

Marko Poženel, Aljaž Zrnec and Dejan Lavbič. 2022. Measuring how motivation affects information quality assessment: a gamification approach, PLOS ONE, 17(10).

Abstract

Purpose – Existing research on the measurability of information quality (IQ) has delivered poor results and demonstrated low inter-rater agreement, measured by the Intra-Class Correlation (ICC), in evaluating IQ dimensions. Low ICC could result in a questionable interpretation of IQ. The purpose of this paper is to analyse whether assessors’ motivation can improve ICC.

Methodology – To acquire the participants’ views of IQ, we designed a survey as a gamified process. Additionally, we chose a Web-based study to reach a broader audience. We increased the validity of the research by including a diverse set of participants (i.e. individuals with different educational, demographic and social backgrounds).

Findings – The study results indicate that motivation improved the ICC of IQ on average by 0.27, demonstrating an increase in measurability from poor (0.29) to moderate (0.56). The results reveal a positive correlation between motivation level and ICC, with a significant overall increase in ICC relative to previous studies. The research also identified trends in ICC for different dimensions of IQ with the best results achieved for completeness and accuracy.

Practical implications – The work has important practical implications for future IQ research and suggests valuable guidelines. The results of this study imply that considering raters’ motivation improves the measurability of IQ substantially.

Originality – Previous studies addressed ICC in IQ dimension evaluation. However, assessors’ motivation has been neglected. This study investigates the impact of assessors’ motivation on the measurability of IQ. Compared to the results in related work, the level of agreement achieved with the most motivated group of participants was superior.

Keywords

information quality; motivation; information quality assessment; inter-rater agreement; task-at-hand; gameful design

1 Introduction

Making the best possible decisions requires information of the highest quality. As the amount of available information grows, it becomes increasingly difficult to distinguish high-quality information from questionable information (Arazy, Kopak, and Hadar 2017). Poor information quality can weaken our decision processes, so we need more reliable measures and new techniques to assess the quality of information (Arazy and Kopak 2011; Ji-Chuan and Bing 2016; Fidler and Lavbič 2017). Unfortunately, such assessment can itself be very demanding (Arazy and Kopak 2011; Arazy, Kopak, and Hadar 2017; Yaari, Baruchson-Arbib, and Bar-Ilan 2011).

In general, the term information quality (IQ) represents the value of information for a given usage. However, IQ often refers to people’s subjective judgment of the goodness and usefulness of information in certain information use settings (Yaari, Baruchson-Arbib, and Bar-Ilan 2011; Michnik and Lo 2009). The literature has widely adopted a multidimensional view of IQ (West and Williamson 2009; Arazy, Kopak, and Hadar 2017) to help manage its complexity.

The measurability of IQ has gained substantial attention in recent years (Yaari, Baruchson-Arbib, and Bar-Ilan 2011; Lian et al. 2018; Zhu et al. 2020). Most research in this field has been limited to measuring the quality of structured data (e.g. data in databases where a schema is defined in advance) (Helfert 2001; Tilly et al. 2017; Madhikermi et al. 2016). Measuring the IQ of unstructured data (e.g. Wikipedia articles) requires different approaches that include interdisciplinary components (Batini et al. 2009). The research community has proposed several determinants of IQ, and there is growing concern regarding how best to identify quality information (Arazy, Kopak, and Hadar 2017). Only a few studies have presented inter-rater agreement results using intraclass correlation coefficient (ICC) statistics, and multiple guidelines exist for interpreting ICC inter-rater agreement values (Landis and Koch 1977; Cicchetti 1994; Koo and Li 2016). Regardless of the ICC interpretation used, the values reported in recent studies are poor or at best moderate (Fidler and Lavbič 2017; Arazy and Kopak 2011). This demonstrates that reaching consensus among raters is difficult when measuring IQ.

Research problems regarding efficient IQ measurement remain relatively underexplored. Previous papers studied some of the cues that affect IQ assessment on selected sources of data (Arazy and Kopak 2011; Arazy, Kopak, and Hadar 2017). However, the research community needs additional case studies to evaluate the inter-rater reliability of IQ dimensions (a single aspect of data that can be measured and improved) in various settings to help increase the external validity of the cues and factors.

Being motivated means having an incentive to do something (Ryan and Deci 2000a). An intrinsically motivated person does something for its own sake, for the sheer enjoyment of the task, while an extrinsically motivated person does something in order to attain some external goal or meet some externally imposed constraint (Hennessey et al. 2015). To the best of our knowledge, no previous research has investigated how motivation affects IQ assessment and whether it has a significant impact on inter-rater agreement. In this paper, we propose a new approach that improves the measurability of IQ by considering various IQ dimensions. Specifically, we study the effect of motivation on IQ measurement and inter-rater reliability. Researchers have long regarded motivation as an important factor influencing learning performance (Mamaril et al. 2013; DePasque and Tricomi 2015; Tokan and Imakulata 2019). Our goal in this work is to corroborate that motivation also affects the measurability of IQ.

In related work, Arazy and Kopak (2011) studied the measurability of IQ in Wikipedia articles, and Fidler and Lavbič (2017) narrowed the object of study to individual paragraphs. In this work, we evaluate the IQ of hints that (i) correspond to selected IQ dimensions, (ii) have diversified predefined quality, and (iii) help participants progress through the gamified process. Specifically, we evaluate the relevance of gamified task hints targeting the IQ dimensions of accuracy, objectivity, completeness, and representation. We are interested in the consistency between multiple raters assessing the same set of hints in a hands-on assignment.

This study contributes to the existing literature concerning IQ measurability and inter-rater reliability. It extends the work presented in Arazy and Kopak (2011) and Arazy, Kopak, and Hadar (2017). To support comparison, we use the categorization of IQ dimensions defined by Lee et al. (2002), previously used in similar studies (Arazy and Kopak 2011; Fidler and Lavbič 2017; Arazy, Kopak, and Hadar 2017).

The remainder of the paper is structured as follows. In section 2, we review related work and introduce the problem statement and our proposed solution. We then present the empirical study design and the experiment in section 3. In section 4, we present the results and discuss their implications and limitations. Finally, in section 5, we present our conclusions and limitations and suggest directions for future work.

3 Method

3.1 Evaluation mechanics

To address the research questions, we conducted an online quantitative case study. Participation was voluntary, and consent was obtained from participants before the start. As presented in detail in section 3.2, we included a diverse set of participants (i.e. individuals with different educational, demographic and social backgrounds), which strengthened the validity of our research. To reach a broader audience, we chose a Web-based study, and to acquire the participants’ views of IQ, we designed a survey in the form of a gamified process.

Previous studies on measuring IQ dimensions focused primarily on students (Arazy and Kopak 2011) and university librarians (Arazy, Kopak, and Hadar 2017). The main drawback of these studies is their small number of participants. In the present study, we chose a design that investigates smaller data sources (short hints) while using existing IQ dimensions and existing estimation metrics with a much larger set of participants, as discussed further in section 3.2.

We employed gamification to measure the influence of motivation on the evaluation of IQ. For our experiment, we developed a Web-based gamified software tool. The overall goal of the game is to save a little bird, raise it to adulthood, and return it to the wild (see details in section 3.3). The objective is to complete the gamified process in a minimum number of attempts in order to receive more points, which addresses the motivational aspect. Because points are awarded dynamically according to performance, more motivated participants collect more points for their effort. To increase user engagement in the gamified process of measuring IQ, we included the following game elements: leaderboard (visual display of social comparison), levels (the player’s progression), and points (virtual rewards for the player’s effort), as these elements improve the motivation and performance of participants (Mekler et al. 2017).

The research goal of the gamified process is to assess the IQ of hints that correspond to the selected IQ dimensions and help participants progress through the gamified process. We argue that the participants’ success in resolving the gamified task strongly correlates with the consistency of their IQ evaluations in the selected dimensions.

3.2 Participants

Our study had a total of 1225 participants, who took part between April 2015 and March 2020. Initially, we targeted undergraduate university students to whom we had direct access, and then all potential participants through mail and social media campaigns. During data cleaning, we excluded participants who did not answer all 24 questions (4 game levels with 6 questions each), as well as those who spent a total of less than 5 min (quick random selection of responses) or more than 3 h (multiple breaks while playing) completing the gamified process.

This left 1062 responses, with a median time of 11 min 50 s to complete all 4 game levels. Of these participants, 30.7% were female and 69.3% male, with ages ranging from 15 to 69 (median 20 years).
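The exclusion rules above can be written as a simple filter. The sketch below is illustrative only: the `Session` record and its fields are hypothetical, and it assumes that failing any single criterion (incomplete answers, under 5 min, or over 3 h) was enough to exclude a participant.

```python
from dataclasses import dataclass

@dataclass
class Session:
    """Hypothetical record of one participant's session (illustrative fields)."""
    participant_id: str
    answered: int         # how many of the 24 questions were answered
    total_seconds: float  # total time spent in the gamified process

QUESTIONS = 4 * 6           # 4 game levels x 6 questions each
MIN_SECONDS = 5 * 60        # below 5 min: quick random selection of responses
MAX_SECONDS = 3 * 60 * 60   # above 3 h: multiple breaks while playing

def keep(s: Session) -> bool:
    """Return True if the session survives data cleaning.

    Assumption: failing any one criterion is enough to exclude a participant.
    """
    complete = s.answered == QUESTIONS
    plausible_time = MIN_SECONDS <= s.total_seconds <= MAX_SECONDS
    return complete and plausible_time

sessions = [
    Session("a", 24, 11 * 60 + 50),  # complete, 11 min 50 s
    Session("b", 20, 900),           # incomplete
    Session("c", 24, 120),           # too fast
    Session("d", 24, 4 * 60 * 60),   # too slow
]
kept = [s.participant_id for s in sessions if keep(s)]
print(kept)  # ['a']
```

Sessions lasting exactly 5 min or exactly 3 h are kept here, since the text excludes only times below 5 min or above 3 h.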

In general, we targeted a population that had finished high school, since they have more experience with poor IQ. A total of 40.5% of participants stated that poor IQ deeply disturbs them, and 37.5% stated that it at least bothers them. We sought to increase the diversity of participants to enhance the external validity of the research; 57.6% of the participants were undergraduate students and 42.4% were non-students.

Although the study required no prior knowledge, all participants completed a pre-training that introduced the IQ dimensions (completeness, accuracy, representation, and objectivity) they would evaluate later in the gamified process. Before performing the gamified tasks, they also completed an evaluation task in which they were asked to measure these IQ dimensions in a short paragraph.

3.3 Measuring IQ dimensions

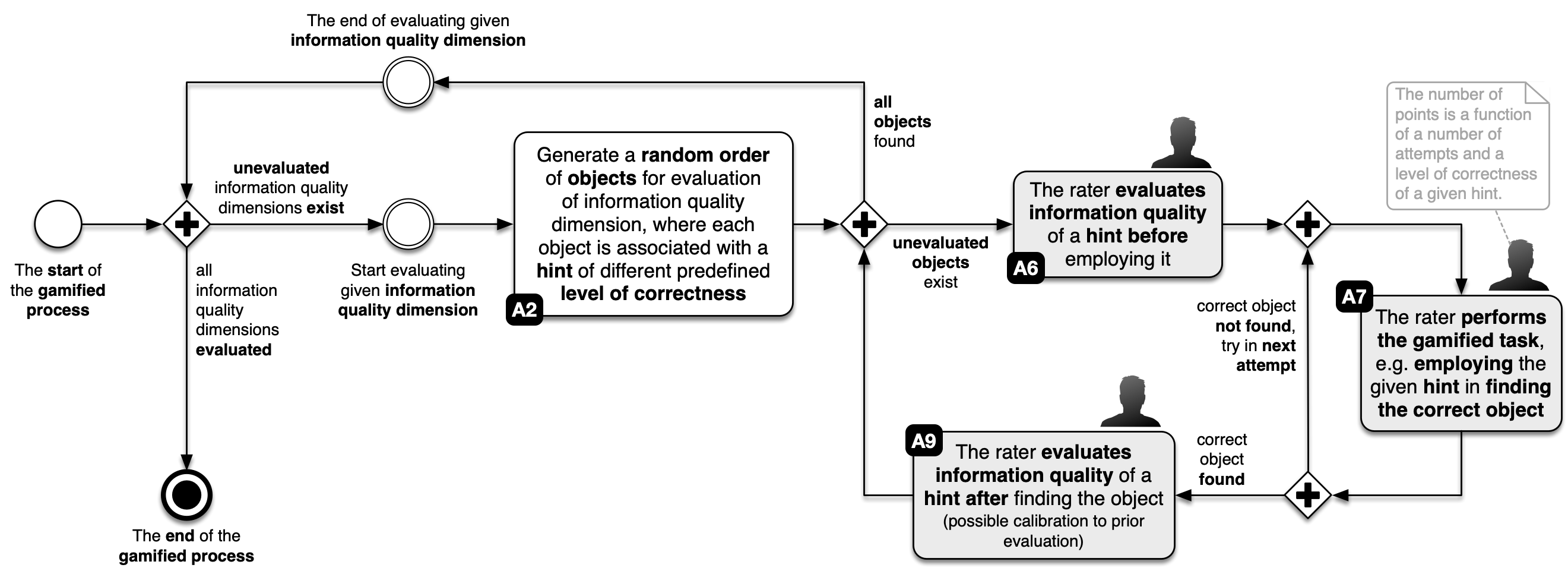

The gamified process consists of four levels. At each game level, we evaluate one of the selected IQ dimensions (completeness, accuracy, representation, and objectivity) highlighted in section 2. Figure 3.1 gives an overview of how the IQ dimensions are measured, while Figure 3.2 shows the process in detail. For every IQ dimension, we present the objects to be evaluated in random order (see activity A2 in Figure 3.1). Each object is associated with a hint that has a different predefined level of correctness (see Equation (3.5)). The rater then evaluates the IQ of the hint (see activity A6 in Figure 3.1) before using it to find the correct object within the gamified process. The number of points awarded is a function of the number of attempts and the level of correctness of the given hint (see Equation (3.6)). Once the rater finds the correct object, they evaluate the IQ of the same hint again and may calibrate the prior evaluation (see activity A9 in Figure 3.1). The gamified process for a given IQ dimension ends when the rater has found all of its objects and evaluated the corresponding hints.

Figure 3.1: Overview of measuring selected IQ dimensions

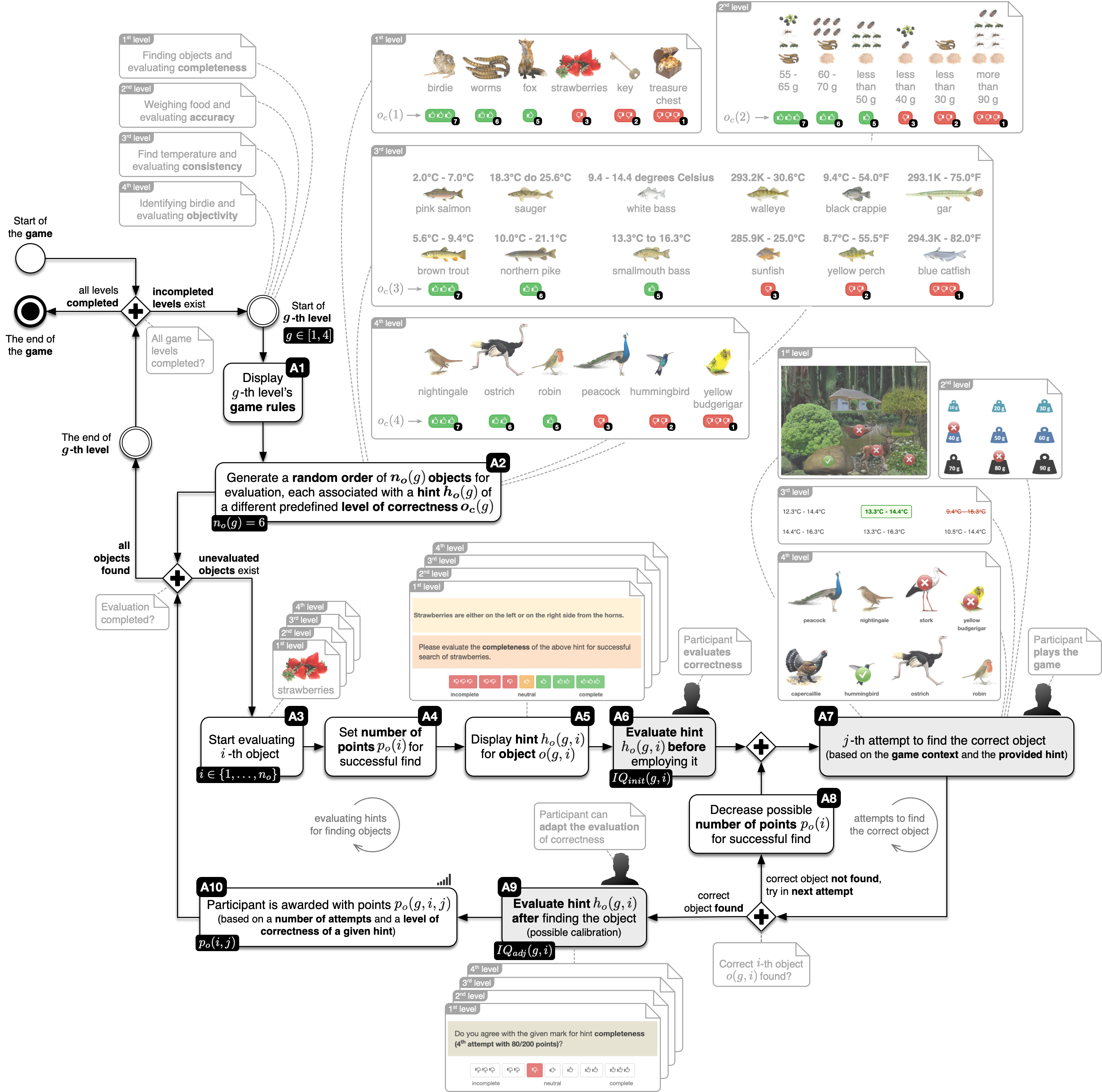

As Figure 3.2 shows, the first step in evaluating an IQ dimension is displaying the game rules for the \(g\)-th level (see activity A1 in Figure 3.2), where \(g \in \{1, 2, 3, 4\}\). At the start, each player receives general information about the story of the level and the tasks to accomplish, along with detailed instructions. A progress bar at the top of the screen informs the player of their status within the gamified process at all times.

Figure 3.2: Detailed process of measuring selected IQ dimensions

To successfully finish each level of the gamified process, participants must find the correct object \(o(g)\), based on the gamified task’s context and the hint provided. The objects for each of the game’s levels \(o(g)\) are selected and displayed to the participant in a random order (see activity A2 in Figure 3.2).

The first level focuses on completeness; participants must find a hidden object from the following set:

\[\begin{equation} o(1) = \{ \text{birdie}, \text{worms}, \text{fox}, \text{strawberries}, \text{key}, \text{treasure chest} \} \tag{3.1} \end{equation}\]

The hints presented vary in completeness: the most complete hint provides enough information to uniquely identify the location of the hidden object, while the least complete hint is highly ambiguous (e.g. there are several possible locations of the hidden object).

The second level focuses on accuracy; participants must weigh food and select the correct weight of the following objects:

\[\begin{equation} \begin{split} o(2) = \{ \\ & \quad \{\text{worms + 2 flies + mosquito + blackberries}\}, \\ & \quad \{\text{crumbs + worms + 6 bugs}\}, \\ & \quad \{\text{crumbs + 6 flies + 2 bugs}\}, \\ & \quad \{\text{crumbs + bug + blackberries}\}, \\ & \quad \{\text{2 crumbs + worms}\}, \\ & \quad \{\text{2 crumbs + 4 mosquitos + 3 flies + 2 bugs}\} \\ \} \end{split} \tag{3.2} \end{equation}\]

The hints are associated with scales of varying accuracy, from the most accurate, which gives the exact measurement, to the least accurate, which gives a false range of measurement.

The third level focuses on representation; participants must find the correct temperature range for a living habitat of the following fish:

\[\begin{equation} \begin{split} o(3) = \{ \\ & \quad \{\text{brown trout and pink salmon}\}, \\ & \quad \{\text{sauger and northern pike}\}, \\ & \quad \{\text{white bass and smallmouth bass}\}, \\ & \quad \{\text{walleye and sunfish}\}, \\ & \quad \{\text{black crappie and yellow perch}\}, \\ & \quad \{\text{gar and blue catfish}\} \\ \} \end{split} \tag{3.3} \end{equation}\]

The hints present temperature ranges in various units (Celsius, Fahrenheit, and Kelvin): the most consistent representation gives a range in a single unit of measurement, while the least consistent representation mixes various units.

The fourth level focuses on objectivity; participants must indicate the correct bird from the following set:

\[\begin{equation} \begin{split} o(4) = \{ \\ & \quad \text{nightingale}, \text{ostrich}, \text{robin}, \text{peacock}, \\ & \quad \text{hummingbird}, \text{yellow budgerigar} \\ \} \end{split} \tag{3.4} \end{equation}\]

The hints describe the bird’s origin, size, and color and are attributed to people with varying levels of objectivity, from the most objective (ornithologists) to the least objective (bankers, programmers, and wall painters).

There are \(\boldsymbol{n_o}(g) = \boldsymbol{6}\) objects at every level \(g\), each associated with a hint \(\boldsymbol{h_o}(g)\) of a different level of correctness \(\boldsymbol{o_c}(g) \in \{1, 2, 3, 5, 6, 7\}\), where \(o_c(g) \in \{1, 2, 3\}\) denotes correct hints (the value \(1\) being the most correct hint) and \(o_c(g) \in \{5, 6, 7\}\) denotes incorrect hints (the value \(7\) being the most incorrect hint). The main part of the task is to evaluate the \(i\)-th object based on the provided hint (see activity A6 in Figure 3.2).

The function of correctness \(o_c(g)\) differs for every level \(g\) and represents the selected IQ dimension being measured

\[\begin{equation} o_c \left( g \right) = \left\{\begin{array}{l @{\quad} c l} o_c = \text{completeness} & ; & \text{if } g = 1 \\ o_c = \text{accuracy} & ; & \text{if } g = 2 \\ o_c = \text{representation} & ; & \text{if } g = 3 \\ o_c = \text{objectivity} & ; & \text{if } g = 4 \end{array}\right. \tag{3.5} \end{equation}\]

At every level \(g\) of the game, participants receive \(n_o(g) = 6\) objects in random order for evaluation. When searching for the correct object, participants can try again if their previous attempt was incorrect (see activity A7 in Figure 3.2).
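The level structure of Equations (3.1) and (3.5) and the random presentation order can be sketched as follows. The assignment of correctness levels to the level-1 objects is hypothetical (the source does not list which hint belongs to which object), and `presentation_order` is an illustrative helper, not part of the study’s tool.

```python
import random

# Equation (3.5): IQ dimension measured at game level g
DIMENSION = {1: "completeness", 2: "accuracy", 3: "representation", 4: "objectivity"}
# Predefined levels of correctness o_c: 1-3 are correct hints, 5-7 incorrect ones
CORRECTNESS_LEVELS = (1, 2, 3, 5, 6, 7)

def is_correct_hint(o_c: int) -> bool:
    """True for a correct hint (o_c in 1..3), False for an incorrect one (5..7)."""
    if o_c not in CORRECTNESS_LEVELS:
        raise ValueError(f"unknown correctness level {o_c}")
    return o_c <= 3

def presentation_order(hints: dict[str, int], rng: random.Random) -> list[tuple[str, int]]:
    """Return the n_o(g) = 6 (object, o_c) pairs of one level in random order."""
    if sorted(hints.values()) != list(CORRECTNESS_LEVELS):
        raise ValueError("a level needs six objects with distinct levels 1, 2, 3, 5, 6, 7")
    order = list(hints.items())
    rng.shuffle(order)  # pairing stays fixed; only the order is randomized
    return order

# Hypothetical assignment of correctness levels to the objects of Equation (3.1)
level_1 = {"birdie": 1, "worms": 5, "fox": 2, "strawberries": 6,
           "key": 3, "treasure chest": 7}
for obj, o_c in presentation_order(level_1, random.Random(2015)):
    print(DIMENSION[1], obj, o_c, "correct" if is_correct_hint(o_c) else "incorrect")
```

Keeping the object-to-hint pairing fixed while shuffling only the order mirrors the text: each object has a predefined hint, but participants see the objects in random order.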

The points awarded for a correct answer \(\boldsymbol{p_o(i,j)}\) decrease linearly with the number of attempts \(\boldsymbol{j} \ge 1\) and with the predefined level of correctness \(\boldsymbol{o_c}\) (the less correct the hint is, the more points are awarded if the object is correctly identified), as given in Equation (3.6). We based the quality of hints on a previous analysis and on rules that pertain to the dimension under study.

\[\begin{equation} p_o \left( i, j \right) = \left\{\begin{array}{c @{\quad} c l} 10 \cdot (6 - j) \cdot (7 - o_c) & ; & \text{if } j < 6 \text{ and } o_c(i) \in \{1, 2, 3\} \\ 10 \cdot (6 - j) \cdot (8 - o_c) & ; & \text{if } j < 6 \text{ and } o_c(i) \in \{5, 6, 7\} \\ 0 & ; & \text{otherwise} \end{array}\right. \tag{3.6} \end{equation}\]

Each participant can make multiple attempts to find the object (see activity A7 in Figure 3.2) but is, according to Equation (3.6), motivated to find the correct answer in the minimum number of attempts. Each failed attempt reduces the number of points awarded (see activity A8 in Figure 3.2) at a given level \(g\) and consequently in the game as a whole.
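Equation (3.6) translates directly into a small function. This is a sketch; the function and constant names are ours. As written, the correctness factor \(7 - o_c\) or \(8 - o_c\) takes the values 6, 5, 4, 3, 2, 1 for \(o_c = 1, 2, 3, 5, 6, 7\), and the attempt factor \(6 - j\) runs from 5 down to 1, so no points are awarded from the sixth attempt on.

```python
CORRECT = (1, 2, 3)    # correct hints
INCORRECT = (5, 6, 7)  # incorrect hints

def points(j: int, o_c: int) -> int:
    """Points p_o(i, j) of Equation (3.6) for finding the object on attempt j."""
    if j < 1:
        raise ValueError("the attempt counter j starts at 1")
    if o_c in CORRECT:
        factor = 7 - o_c          # 6, 5, 4 for o_c = 1, 2, 3
    elif o_c in INCORRECT:
        factor = 8 - o_c          # 3, 2, 1 for o_c = 5, 6, 7
    else:
        raise ValueError("o_c must be one of 1, 2, 3, 5, 6, 7")
    if j >= 6:
        return 0                  # "otherwise" branch of Equation (3.6)
    return 10 * (6 - j) * factor
```

For example, `[points(j, 1) for j in range(1, 7)]` gives `[300, 240, 180, 120, 60, 0]`, showing how each failed attempt lowers the reward.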

When a participant at game level \(g\) starts evaluating the \(i\)-th object \(o(g, i)\) on a 7-point Likert scale (see activity A3 in Figure 3.2), the associated hint \(h_o(g, i)\) with a predefined level of correctness \(o_c(g, i)\) is displayed (see activity A5 in Figure 3.2). The participant rates the hint with \(IQ_{init}(g, i)\) (see activity A6 in Figure 3.2) before trying to find the correct object \(o(g, i)\) (see activity A7 in Figure 3.2) in as few attempts \(j\) as possible, because the points \(p_o(g, i, j)\) depend on the number of attempts required for success. After the correct object \(o(g, i)\) is found, the participant can calibrate the previous evaluation \(IQ_{init}(g, i)\) with \(IQ_{adj}(g, i)\) (see activity A9 in Figure 3.2), altering the previously given score for the IQ dimension if desired.
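The per-object bookkeeping described above can be captured in a small record. This is a hypothetical data structure for illustration, not the authors’ implementation; the class and field names are ours. The text does not state whether \(IQ_{init}\), \(IQ_{adj}\), or both enter the ICC analysis, so `final_rating` is only one possible choice.

```python
from dataclasses import dataclass
from typing import Optional

LIKERT = range(1, 8)                     # 7-point Likert scale
CORRECTNESS_LEVELS = (1, 2, 3, 5, 6, 7)

@dataclass
class HintEvaluation:
    """Hypothetical record of one rater's evaluation of hint h_o(g, i)."""
    g: int                         # game level, 1..4
    i: int                         # object index within the level, 1..6
    o_c: int                       # predefined level of correctness of the hint
    iq_init: int                   # IQ_init(g, i), rated before searching (A6)
    attempts: int                  # j, attempts needed to find o(g, i) (A7)
    iq_adj: Optional[int] = None   # IQ_adj(g, i), optional calibration (A9)

    def __post_init__(self) -> None:
        if self.g not in range(1, 5) or self.i not in range(1, 7):
            raise ValueError("g must be in 1..4 and i in 1..6")
        if self.o_c not in CORRECTNESS_LEVELS:
            raise ValueError("o_c must be one of 1, 2, 3, 5, 6, 7")
        if self.attempts < 1:
            raise ValueError("finding the object takes at least one attempt")
        for rating in (self.iq_init, self.iq_adj):
            if rating is not None and rating not in LIKERT:
                raise ValueError("ratings must lie on the 1..7 Likert scale")

    @property
    def final_rating(self) -> int:
        """IQ_adj if the rater calibrated the score, otherwise IQ_init."""
        return self.iq_init if self.iq_adj is None else self.iq_adj

    @property
    def calibration(self) -> int:
        """Change made in A9 (0 if the rater kept the initial score)."""
        return self.final_rating - self.iq_init
```

For instance, `HintEvaluation(g=1, i=3, o_c=6, iq_init=5, attempts=3, iq_adj=2)` has `final_rating == 2` and `calibration == -3`: a rater who first trusted a poor hint lowers the score after seeing where it led.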

The evaluation of IQ dimensions is complete at the end of the fourth level, when the system shows the participant’s score and overall position in the rankings.

3.4 Evaluation metrics

The intraclass correlation coefficient is a reliability index widely used in intra-rater and inter-rater reliability analyses. Since in this work we measured the variation between raters assessing the same group of objects, we focused on inter-rater reliability. Following the guidelines proposed by Koo and Li (2016), we selected ICC(2,1) as the measure of agreement for our inter-rater reliability study (see Figure 4.1 and Table 4.1).

The attributes of the ICC(2,1) are:

- the model is two-way random with k raters randomly selected and each hint (total of n hints) measured by the same set of k raters,

- the type is single measures, i.e. reliability applies to a context in which a single measurement by a single rater is performed,

- the metric is absolute agreement, where the agreement between raters is of interest, including systematic errors of both raters and random residual errors.

ICC(2,1) is defined as follows

\[\begin{equation} \boldsymbol{ICC(2,1)} = \frac{BMS - EMS}{BMS + (k - 1) \cdot EMS + \frac{k}{n} \cdot (JMS - EMS)} \tag{3.7} \end{equation}\]

where

- \(\boldsymbol{WMS} = \frac{WSS - BSS}{n \cdot (k - 1)}\) is Within Mean Squares (from one-way ANOVA),

- \(\boldsymbol{BMS} = \frac{BSS}{n - 1}\) is Between Objects Mean Squares (from one-way ANOVA),

- \(\boldsymbol{JMS} = \frac{JSS}{k - 1}\) is Joint (between raters) Mean Squares (from two-way ANOVA),

- \(\boldsymbol{EMS} = \frac{ESS}{(n - 1) \cdot (k - 1)}\) is Error (residual) Mean Squares (from two-way ANOVA),

- \(\boldsymbol{ESS} = WSS - BSS - JSS\) is Error (residual) Sum of Square (from two-way ANOVA),

- \(\boldsymbol{JSS} = n \cdot \sum_{j = 1}^{k} \Big( \frac{1}{n} \cdot \sum_{i = 1}^n v_{ij} - m_a \Big)^2\) is Joint (between raters) Sum of Square (from two-way ANOVA),

- \(\boldsymbol{BSS} = k \cdot \sum_{i = 1}^{n} \Big( \frac{1}{k} \cdot \sum_{j = 1}^{k} v_{ij} - m_a \Big)^2\) is Between Objects Sum of Squares (from one-way ANOVA),

- \(\boldsymbol{WSS} = \sum_{i = 1}^{n} \sum_{j = 1}^{k} \Big( v_{ij} - m_a \Big)^2\) is Within Sum of Squares of all raters (from one-way ANOVA) and

- \(\boldsymbol{m_a} = \frac{1}{n \cdot k} \sum_{i = 1}^{n} \sum_{j = 1}^{k} v_{ij}\).

To measure the reliability of the scale, we also calculate Cronbach’s alpha, which is equivalent to ICC(3,k) and is defined as

\[\begin{equation} \boldsymbol{\alpha} = \frac{BMS - EMS}{BMS} \tag{3.8} \end{equation}\]
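Equations (3.7) and (3.8) and the sums of squares defined above can be computed directly from an \(n \times k\) rating matrix, with one row per hint and one column per rater. The sketch below is a standard-library illustration of those definitions, not the tool used in the study; the function names are hypothetical. It also computes the textbook form of Cronbach’s alpha (raters treated as items) to show that it coincides with Equation (3.8).

```python
from statistics import fmean, variance
from typing import NamedTuple, Sequence

class IccResult(NamedTuple):
    bms: float       # between-objects (hints) mean squares
    wms: float       # within mean squares
    jms: float       # between-raters mean squares
    ems: float       # residual mean squares
    icc_2_1: float   # Equation (3.7)
    alpha: float     # Equation (3.8), Cronbach's alpha = ICC(3,k)

def icc_from_ratings(v: Sequence[Sequence[float]]) -> IccResult:
    """v[i][j] is the rating of hint i (n rows) by rater j (k columns)."""
    n, k = len(v), len(v[0])
    if n < 2 or k < 2 or any(len(row) != k for row in v):
        raise ValueError("need a complete n x k matrix with n >= 2 and k >= 2")
    m_a = fmean(x for row in v for x in row)                    # grand mean
    row_means = [fmean(row) for row in v]                        # per hint
    col_means = [fmean(row[j] for row in v) for j in range(k)]   # per rater
    wss = sum((x - m_a) ** 2 for row in v for x in row)          # WSS
    bss = k * sum((m - m_a) ** 2 for m in row_means)             # BSS
    jss = n * sum((m - m_a) ** 2 for m in col_means)             # JSS
    ess = wss - bss - jss                                        # ESS
    bms = bss / (n - 1)
    wms = (wss - bss) / (n * (k - 1))
    jms = jss / (k - 1)
    ems = ess / ((n - 1) * (k - 1))
    icc = (bms - ems) / (bms + (k - 1) * ems + k / n * (jms - ems))
    return IccResult(bms, wms, jms, ems, icc, (bms - ems) / bms)

def cronbach_alpha(v: Sequence[Sequence[float]]) -> float:
    """Textbook Cronbach's alpha with raters treated as items."""
    k = len(v[0])
    item_var = sum(variance([row[j] for row in v]) for j in range(k))
    total_var = variance([sum(row) for row in v])
    return k / (k - 1) * (1 - item_var / total_var)

# Illustrative scores (arbitrary values, not on the study's 7-point scale):
# 6 hints (rows) rated by 4 raters (columns)
ratings = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
res = icc_from_ratings(ratings)
print(round(res.icc_2_1, 2), round(res.alpha, 2), round(cronbach_alpha(ratings), 2))
# 0.29 0.91 0.91
```

Here the raters differ systematically (the second rater scores much lower), which the absolute-agreement ICC(2,1) penalizes through JMS while α does not; this is why α can be high when ICC(2,1) is low. In the study, each dimension would give a matrix with \(n = 6\) hints and \(k\) raters per quartile group (\(n = 24\) for CIQ).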

4 Results and discussion

4.1 Results

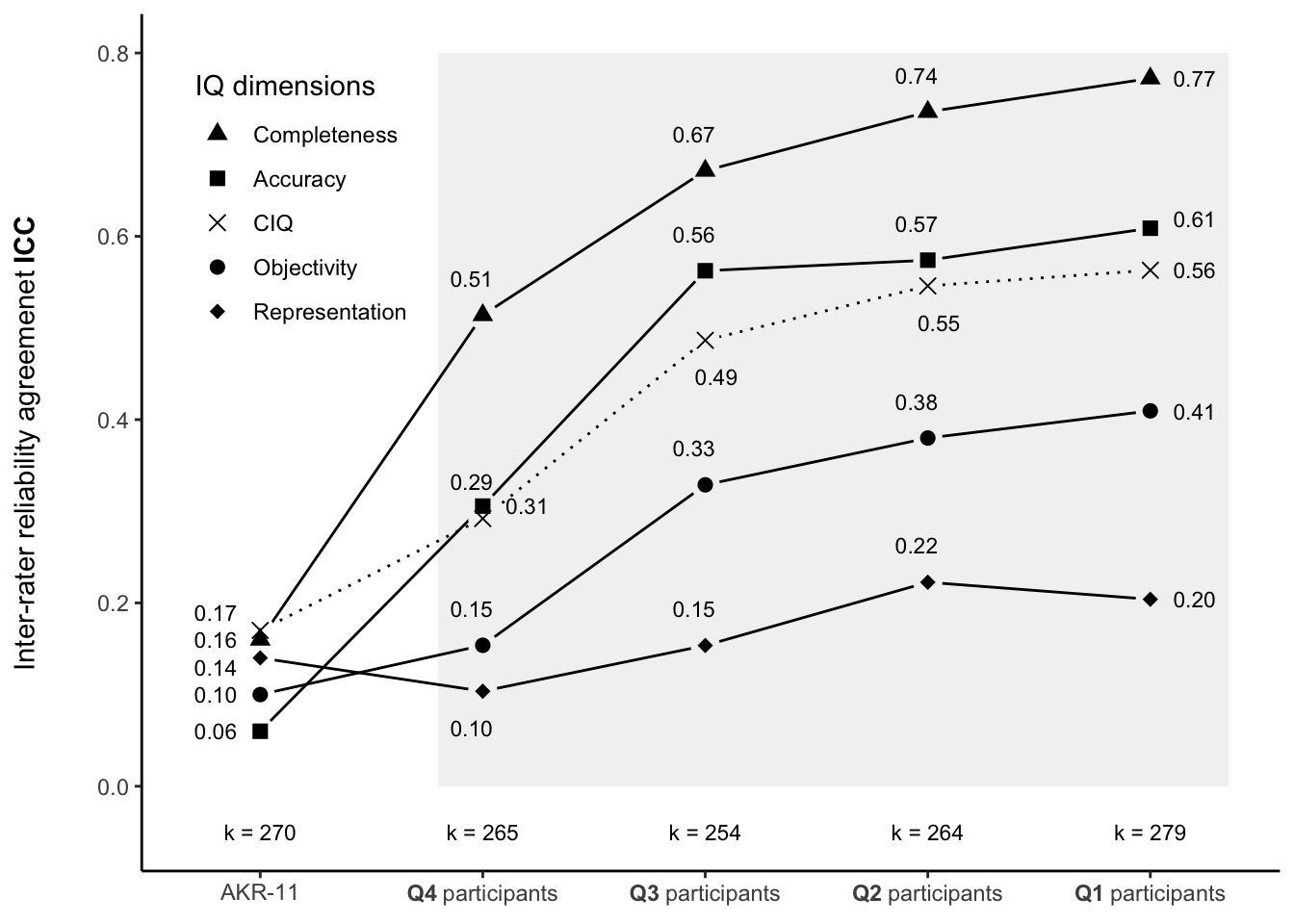

Participants in our research evaluated the IQ dimensions of hints in a gamified process in which their effort was rewarded with a score. Table 4.1, Figure 4.1, and Figure 4.2 show the results for both research questions. The experiment had a twofold purpose: first, to measure inter-rater agreement as ICC(2,1) when evaluating various IQ dimensions, and second, to measure the motivation of participants in the gamified process. Participants’ motivation is measured by game points, which reflect player performance as determined by the number of attempts and the predefined level of correctness of the hint for a given IQ dimension. The mechanics of point calculation are defined in Equation (3.6): each participant can make multiple attempts to find the object but is motivated to find the correct answer in the minimum number of attempts. Each failed attempt reduces the number of points awarded to the player at a given level and consequently in the game as a whole. ICC(2,1) and the groups Q are not directly related: ICC(2,1) focuses on agreement with other raters, while the groups focus on the IQ dimension value provided by the rater in relation to the predefined level of correctness associated with the dimension.

Table 4.1 presents detailed intraclass correlation results ICC(2,1) (denoted by ICC), including the measured reliability of scale ICC(3,k) (denoted by \(\alpha\)), for various constructs and different groups of participants. We divided the participating players into four groups according to the number of points they scored in the gamified environment. The groups in Figure 4.1 are arranged in ascending order by quartile. Group Q4 represents the players who achieved the lowest scores, while Q1 represents the highest-scoring players.

There are five IQ constructs: one for each of the four dimensions under investigation, and CIQ, the mean value of all four dimensions.

| IQ dimension | Q | n (hints) | k (raters) | BMS | WMS | JMS | EMS | ICC(2,1) | ICC(3,k) |
|:--|:--|--:|--:|--:|--:|--:|--:|--:|--:|
| Completeness | Q4 | 6 | 265 | 871.10 | 3.10 | 6.36 | 2.45 | 0.51 | 1.00 |
| Completeness | Q3 | 6 | 254 | 1,202.42 | 2.31 | 3.75 | 2.02 | 0.67 | 1.00 |
| Completeness | Q2 | 6 | 264 | 1,357.12 | 1.84 | 3.06 | 1.60 | 0.74 | 1.00 |
| Completeness | Q1 | 6 | 279 | 1,494.92 | 1.58 | 2.54 | 1.39 | 0.77 | 1.00 |
| Accuracy | Q4 | 6 | 265 | 442.82 | 3.77 | 9.83 | 2.56 | 0.31 | 0.99 |
| Accuracy | Q3 | 6 | 254 | 764.38 | 2.34 | 5.05 | 1.79 | 0.56 | 1.00 |
| Accuracy | Q2 | 6 | 264 | 841.57 | 2.36 | 5.49 | 1.74 | 0.57 | 1.00 |
| Accuracy | Q1 | 6 | 279 | 956.64 | 2.20 | 4.68 | 1.70 | 0.61 | 1.00 |
| Representation | Q4 | 6 | 265 | 137.10 | 4.39 | 12.89 | 2.69 | 0.10 | 0.98 |
| Representation | Q3 | 6 | 254 | 161.69 | 3.46 | 9.37 | 2.28 | 0.15 | 0.99 |
| Representation | Q2 | 6 | 264 | 229.97 | 3.02 | 8.49 | 1.92 | 0.22 | 0.99 |
| Representation | Q1 | 6 | 279 | 227.29 | 3.15 | 10.07 | 1.77 | 0.20 | 0.99 |
| Objectivity | Q4 | 6 | 265 | 165.93 | 3.40 | 8.66 | 2.34 | 0.15 | 0.99 |
| Objectivity | Q3 | 6 | 254 | 385.43 | 3.08 | 6.67 | 2.36 | 0.33 | 0.99 |
| Objectivity | Q2 | 6 | 264 | 453.62 | 2.79 | 6.04 | 2.14 | 0.38 | 1.00 |
| Objectivity | Q1 | 6 | 279 | 557.23 | 2.87 | 6.63 | 2.12 | 0.41 | 1.00 |
| CIQ | Q4 | 24 | 265 | 403.51 | 3.66 | 20.39 | 2.94 | 0.29 | 0.99 |
| CIQ | Q3 | 24 | 254 | 675.34 | 2.80 | 11.25 | 2.43 | 0.49 | 1.00 |
| CIQ | Q2 | 24 | 264 | 796.64 | 2.50 | 10.60 | 2.15 | 0.55 | 1.00 |
| CIQ | Q1 | 24 | 279 | 882.81 | 2.45 | 11.76 | 2.05 | 0.56 | 1.00 |
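Assuming the reported mean squares are the inputs to Equations (3.7) and (3.8), the two ICC columns of Table 4.1 can be reproduced from the BMS, JMS, and EMS columns alone (WMS is not needed). The short check below copies the Q4 and Q1 rows from the table; the helper names are ours.

```python
# Rows copied from Table 4.1 (Q4 and Q1 only):
# (dimension, group, n, k, BMS, JMS, EMS, reported ICC(2,1), reported ICC(3,k))
ROWS = [
    ("Completeness",   "Q4",  6, 265,  871.10,  6.36, 2.45, 0.51, 1.00),
    ("Completeness",   "Q1",  6, 279, 1494.92,  2.54, 1.39, 0.77, 1.00),
    ("Accuracy",       "Q4",  6, 265,  442.82,  9.83, 2.56, 0.31, 0.99),
    ("Accuracy",       "Q1",  6, 279,  956.64,  4.68, 1.70, 0.61, 1.00),
    ("Representation", "Q4",  6, 265,  137.10, 12.89, 2.69, 0.10, 0.98),
    ("Representation", "Q1",  6, 279,  227.29, 10.07, 1.77, 0.20, 0.99),
    ("Objectivity",    "Q4",  6, 265,  165.93,  8.66, 2.34, 0.15, 0.99),
    ("Objectivity",    "Q1",  6, 279,  557.23,  6.63, 2.12, 0.41, 1.00),
    ("CIQ",            "Q4", 24, 265,  403.51, 20.39, 2.94, 0.29, 0.99),
    ("CIQ",            "Q1", 24, 279,  882.81, 11.76, 2.05, 0.56, 1.00),
]

def icc_2_1(bms: float, jms: float, ems: float, n: int, k: int) -> float:
    """Equation (3.7): two-way random, single measures, absolute agreement."""
    return (bms - ems) / (bms + (k - 1) * ems + k / n * (jms - ems))

def icc_3_k(bms: float, ems: float) -> float:
    """Equation (3.8): Cronbach's alpha, i.e. ICC(3,k)."""
    return (bms - ems) / bms

for dim, q, n, k, bms, jms, ems, rep_icc, rep_alpha in ROWS:
    icc = icc_2_1(bms, jms, ems, n, k)
    alpha = icc_3_k(bms, ems)
    print(f"{dim:<15} {q}  ICC(2,1)={icc:.2f} (table {rep_icc:.2f})  "
          f"alpha={alpha:.2f} (table {rep_alpha:.2f})")
```

With \(k\) in the hundreds, the \((k-1) \cdot EMS\) term dominates the denominator of ICC(2,1), whereas α depends only on the ratio of EMS to BMS; this explains why α stays near 1.00 in every row while ICC(2,1) varies widely.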

Figure 4.1 depicts ICC results for the selected set of IQ dimensions (completeness, accuracy, representation, and objectivity) for different participants’ groups (Q1, Q2, Q3, and Q4).

Figure 4.1: Intraclass correlation vs. performance of players. The y-axis represents the ICC, while the x-axis portrays four groups of participants. The groups are divided into quartiles according to the points that participants scored when performing the gamified process. The first column AKR-11 contains results of a similar IQ study, in which Arazy and Kopak (2011) studied the measurability of IQ on the same set of IQ dimensions used in our study. The four highlighted columns (rightmost) exhibit the results of our study, where each column represents one group of players.

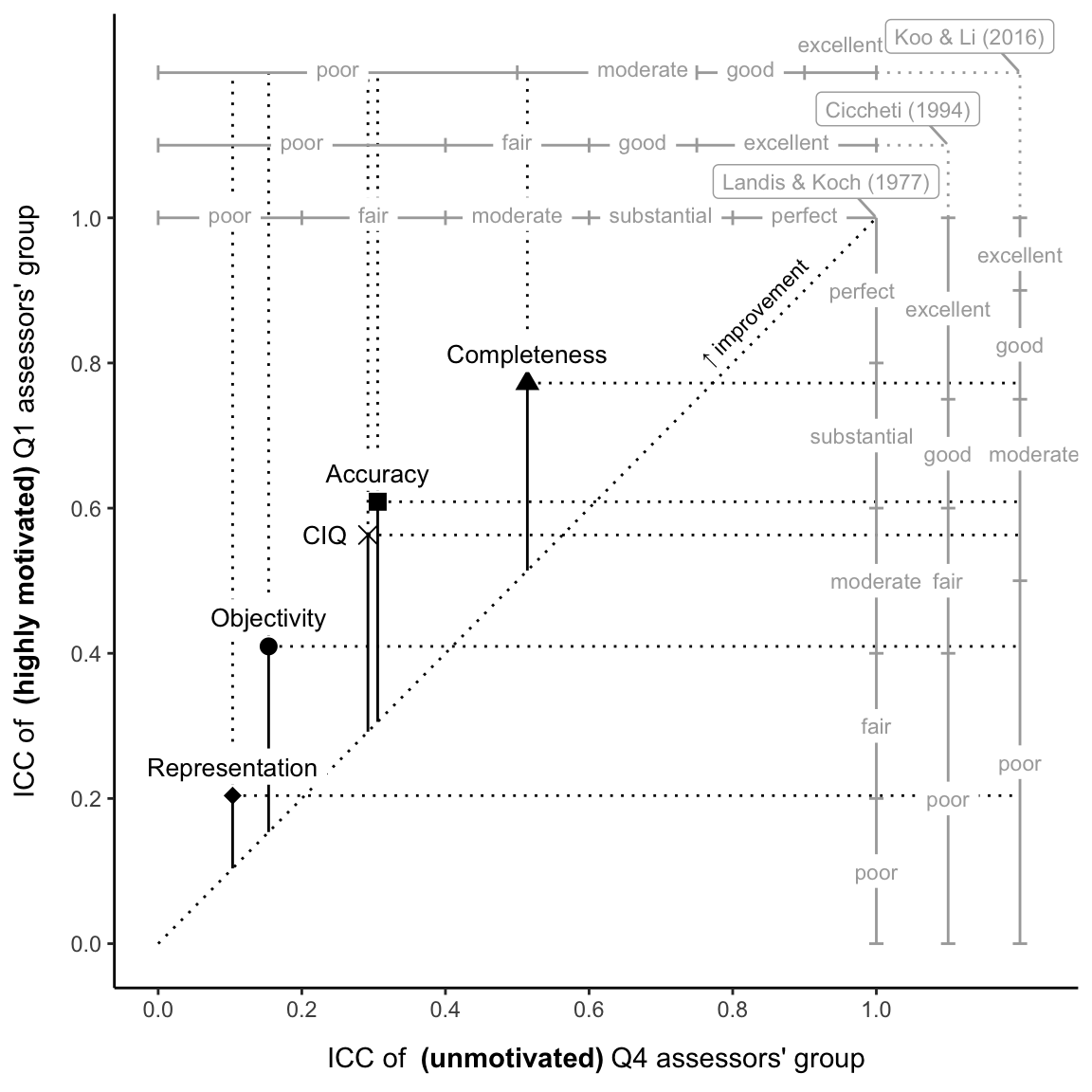

There are multiple guidelines for the interpretation of ICC inter-rater agreement values (Landis and Koch 1977; Cicchetti 1994; Koo and Li 2016). Figure 4.2 summarizes the results of our study by incorporating all aforementioned ICC interpretations. We can observe that highly motivated participants achieved substantially better results compared to the unmotivated ones regardless of the interpretation chosen.

Figure 4.2: Comparing inter-rater agreement for IQ dimensions in terms of motivation by assessors’ groups. The dual-scale data chart depicts the relationship between ICC values and various interpretations of inter-rater agreement. It compares the four IQ dimensions in terms of the extent to which motivation affected the increase in inter-rater agreement. The bottom x-axis denotes ICC for unmotivated users (Q4), while the left y-axis represents ICC values for highly motivated users (Q1). The scales on top of the x-axis and right of the y-axis denote various ICC interpretations for unmotivated (Q4) and motivated (Q1) users, respectively. The figure depicts all IQ dimensions and the mean value of the aforementioned dimensions. ICC values above the identity line (i.e. the dotted diagonal) represent an increase in ICC, while values below represent a decrease in ICC, when comparing unmotivated (Q4) and motivated (Q1) groups.
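The three interpretation schemes used in Figure 4.2 can be expressed as simple threshold functions. The sketch uses the cut-offs as they are commonly cited for Landis and Koch (1977), Cicchetti (1994), and Koo and Li (2016); how a value lying exactly on a boundary is classified is our assumption and may differ from the figure.

```python
def landis_koch(icc: float) -> str:
    """Landis and Koch (1977): slight, fair, moderate, substantial, almost perfect."""
    if icc < 0.0:
        return "poor"
    if icc <= 0.20:
        return "slight"
    if icc <= 0.40:
        return "fair"
    if icc <= 0.60:
        return "moderate"
    if icc <= 0.80:
        return "substantial"
    return "almost perfect"

def cicchetti(icc: float) -> str:
    """Cicchetti (1994): poor < 0.40 <= fair < 0.60 <= good < 0.75 <= excellent."""
    if icc < 0.40:
        return "poor"
    if icc < 0.60:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"

def koo_li(icc: float) -> str:
    """Koo and Li (2016): poor < 0.5 <= moderate < 0.75 <= good < 0.9 <= excellent."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc < 0.9:
        return "good"
    return "excellent"

# CIQ values for the lowest-scoring (Q4) and highest-scoring (Q1) groups, Table 4.1
for group, icc in (("Q4", 0.29), ("Q1", 0.56)):
    print(group, icc, landis_koch(icc), cicchetti(icc), koo_li(icc))
```

Applied to the CIQ values, the three schemes give fair to moderate, poor to fair, and poor to moderate, respectively, which matches the interpretations reported in the discussion.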

4.2 Discussion

When analyzing the differences in ICC between the groups, we can observe that the agreement level increases with players’ performance in terms of the final score achieved (see Figure 4.1). More motivated raters rated the hints more carefully with respect to the given dimension, which led to more homogeneous assessments. Group Q4, whose members attained the lowest scores in the gamified process, represents participants who had the poorest motivation and did not focus on the task at hand. The results for this group serve as a baseline ICC against which to compare the more motivated groups.

When comparing the other three quartiles (Q3, Q2, and Q1), it is evident that inter-rater agreement for all dimensions increases consistently with the game score. We observe the same for the average score, CIQ. The only exception is the representation dimension, for which Q1 achieved slightly lower inter-rater reliability than Q2. However, the ICC for representation in Q1 is still higher than for Q4 and Q3.

There is a substantial increase in ICC between groups Q4 and Q3 for all dimensions. The results indicate that even slightly motivated participants readily achieved better ICC. Therefore, for RQ1, we can conclude that increased motivation improves the measurability of IQ for short hints in the context of a gamified environment.

Based on the detailed ICC results for the hands-on task (see Table 4.1), we can argue in response to RQ2 that ICC is positively correlated with motivation. The overall results for \(\alpha\) reflect high scale reliability. As shown in Table 4.1, we reached the highest \(\alpha\) for the completeness dimension in all four quartile groups (Q4 – Q1), which is also in line with the best ICC results for that dimension.

In terms of ICC, participants attained the highest agreement levels for completeness, followed by accuracy, objectivity, and representation. For all four IQ dimensions, we obtained better results than existing studies (Arazy, Kopak, and Hadar 2017; Fidler and Lavbič 2017). However, we must emphasize that our study is not a replication of studies from the literature. This study focuses on different objects of study (hints) and on other aspects (motivation) that may influence inter-rater reliability.

We observe from our results that raters can consistently identify the quality of a hint if it leads to the solution of a problem; hence, participants could successfully rate completeness. It is also evident that raters can identify missing information and thus deduce completeness. The latter result is in line with the results of Arazy, Kopak, and Hadar (2017), who reported that the ICC for completeness was substantially higher than for other dimensions, although inter-rater agreement on this dimension was substantially lower in their research. Higher ICC may be obtained for completeness because people understand this dimension better than the other three. Participants judged the quality of a hint by the number of possible hiding places that remained after considering it. Since the task was straightforward, the participants succeeded in evaluating the quality of these hints and achieved better inter-rater agreement.

In terms of accuracy, we achieved moderate (0.61) agreement. Compared to completeness (0.77), the lower result for accuracy may indicate that weight estimation is more challenging than locating an object. However, of all quality dimensions, accuracy showed the biggest increase in inter-rater reliability with only slightly increased motivation (from Q4 to Q3).

The measured ICC for the objectivity of motivated participants (Q1) is 0.41, noticeably lower than the ICC for completeness (0.77) and accuracy (0.61). However, the result still indicates fair agreement. For non-motivated players (Q4), the ICC for objectivity was half that for accuracy, and it increased noticeably with increased motivation. It is worth noting that the ICC trend for objectivity is very similar to that observed for completeness and accuracy. Not all participants recognized the relevant messages in the hints, and thus some failed to identify the quality of the hints. The meaning of the hints remained unclear because they included scientific terms, which offered assessors little clarification. As a result, inter-rater reliability was below expectations, though an upward trend was still present.

Participants attained the lowest agreement of all four dimensions for representation. Motivation had a positive impact on representation ICC, but a smaller one than for the other dimensions. Participants struggled to determine the consistency, and hence the quality, of the hints. As a direct consequence, inter-rater reliability was very low. We believe that this task was the most cognitively challenging of the four and that most participants chose not to invest much effort in solving it.

Based on the interpretations of ICC by Landis and Koch (1977), Cicchetti (1994) and Koo and Li (2016), we summarize the results of the study in Figure 4.2. Comparing the group of unmotivated participants (Q4) with the most motivated participants (Q1), the agreement levels were substantially higher for the motivated participants. Motivation increased the ICC of the composite IQ (CIQ) construct by 0.27, which corresponds to the following interpretations (from Q4 to Q1): fair to moderate (Landis and Koch 1977), poor to fair (Cicchetti 1994), and poor to moderate (Koo and Li 2016). Comparison with findings in the literature (Fidler and Lavbič 2017; Arazy, Kopak, and Hadar 2017) further confirms that this study achieved substantially higher ICC values.
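The three interpretation schemes can be made concrete with a short lookup. The Python sketch below is an illustration, not the authors' tooling: it encodes the commonly cited band boundaries of each scheme (how values falling exactly on a boundary are assigned is our assumption; the Landis and Koch bands were originally proposed for kappa) and applies them to the CIQ values reported in the abstract, 0.29 for Q4 and 0.56 for Q1.

```python
def landis_koch(icc: float) -> str:
    # Landis and Koch (1977); bands originally proposed for kappa statistics.
    if icc < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")):
        if icc <= upper:
            return label
    return "almost perfect"


def cicchetti(icc: float) -> str:
    # Cicchetti (1994): < 0.40 poor, 0.40-0.59 fair, 0.60-0.74 good, >= 0.75 excellent.
    if icc < 0.40:
        return "poor"
    if icc < 0.60:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"


def koo_li(icc: float) -> str:
    # Koo and Li (2016): < 0.50 poor, 0.50-0.75 moderate, 0.75-0.90 good, above excellent.
    if icc < 0.50:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc < 0.90:
        return "good"
    return "excellent"


def interpret(icc: float) -> dict[str, str]:
    # Label one ICC value under all three schemes at once.
    return {
        "Landis and Koch": landis_koch(icc),
        "Cicchetti": cicchetti(icc),
        "Koo and Li": koo_li(icc),
    }


# CIQ values from the abstract: Q4 (non-motivated) and Q1 (most motivated).
for group, icc in (("Q4", 0.29), ("Q1", 0.56)):
    print(group, icc, interpret(icc))
```

Applying all three schemes side by side makes it visible why the same 0.27 increase reads as "fair to moderate", "poor to fair" or "poor to moderate" depending on the reference used.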

We found an increase in agreement between the two groups of participants on all four IQ dimensions. We achieved the highest ICC for completeness, which increased from moderate (0.51) to good (0.77) according to the interpretation of Koo and Li (2016). The increase in ICC for completeness (0.26) was slightly below the CIQ increase (0.27), yet completeness still reached the highest agreement of all four dimensions. Interestingly, we observed the biggest improvement in ICC for accuracy, with ICC increasing by as much as 0.30, from poor (0.31) to moderate (0.61) agreement according to Koo and Li (2016). Motivation proved to be the key factor contributing to better accuracy assessment. The level of agreement of non-motivated participants for objectivity (0.15) and representation (0.10) was poor. Motivation did not improve the quality of assessment enough to reach moderate agreement for either dimension (0.41 and 0.20, respectively). However, objectivity yielded an ICC increase (0.26) only slightly below the CIQ increase, indicating that motivation is a driving factor for this dimension as well. Nonetheless, objectivity remains difficult to evaluate consistently, even with motivated assessors. For representation, we observed the lowest agreement and the smallest ICC increase (0.10) among the four dimensions. Non-motivated participants achieved poor agreement (0.10), and although motivation raised inter-rater agreement, the result remained in the zone of poor agreement (0.20) according to Koo and Li (2016).
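The per-dimension changes above can be recomputed from the reported values. This small Python sketch uses only the Q4 and Q1 ICCs stated in the paragraph and the CIQ increase of 0.27 (0.29 to 0.56, as given in the abstract); it separates two quantities that are easy to conflate: the largest increase, which belongs to accuracy, and the highest final agreement, which belongs to completeness.

```python
# Q4 (non-motivated) and Q1 (most motivated) ICC values quoted in the paragraph above.
ICC = {
    "completeness": (0.51, 0.77),
    "accuracy": (0.31, 0.61),
    "objectivity": (0.15, 0.41),
    "representation": (0.10, 0.20),
}
CIQ_INCREASE = 0.27  # increase of the composite IQ (CIQ) ICC, from 0.29 to 0.56

# Round to two decimals to suppress floating-point noise (e.g. 0.61 - 0.31).
increases = {dim: round(q1 - q4, 2) for dim, (q4, q1) in ICC.items()}
largest_increase = max(increases, key=increases.get)
highest_q1 = max(ICC, key=lambda dim: ICC[dim][1])

for dim, delta in increases.items():
    relation = "above" if delta > CIQ_INCREASE else "below"
    print(f"{dim:<15} +{delta:.2f} ({relation} the CIQ increase of {CIQ_INCREASE:.2f})")
print("largest increase:", largest_increase)  # accuracy
print("highest Q1 ICC:", highest_q1)          # completeness
```

Only accuracy exceeds the CIQ increase; completeness and objectivity fall just below it, and representation lags well behind.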

4.3 Implications for research and practice

First, our study supports the theoretical underpinning of IQ studies and confirms previous findings that IQ is a multidimensional construct that is difficult to measure. We also confirm that inter-rater agreement can vary significantly across IQ dimensions.

Second, previous studies have reported poor results when assessing the measurability of IQ. According to most ICC interpretations, such results indicate very low measurability, which makes their interpretation questionable. Our study builds on previous IQ-related research and extends it to alternative settings to demonstrate the significance of motivation in IQ assessment. Using gamified tasks to motivate assessors, we were able to significantly improve the measurability of IQ (ICC). The correlation between the points awarded in the gamified process and inter-rater agreement was positive for all four IQ dimensions and for composite IQ (CIQ). The level of agreement achieved with the most motivated group of participants (Q1) was superior to the results reported in related work. Future IQ measurement studies should take these results into account if they aim for interpretable results.
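This section does not spell out how participants were split into motivation quartiles. A minimal Python sketch, assuming that participants are ranked by the points awarded in the gamified process and that Q1 holds the top-scoring quarter, could look like this; the function name, participant IDs and point totals are hypothetical.

```python
def assign_quartiles(points: dict[str, int]) -> dict[str, str]:
    """Hypothetical helper: rank participants by points (highest first) and
    split them into four near-equal groups, with Q1 = highest scorers.
    sorted() is stable even with reverse=True, so ties keep input order."""
    ranked = sorted(points, key=points.get, reverse=True)
    n = len(ranked)
    # Rank i (0-based) maps to quartile i * 4 // n, giving groups 0..3.
    return {pid: f"Q{i * 4 // n + 1}" for i, pid in enumerate(ranked)}


# Hypothetical participants and point totals, for illustration only.
example = {"p1": 120, "p2": 95, "p3": 40, "p4": 10,
           "p5": 75, "p6": 60, "p7": 20, "p8": 110}
quartiles = assign_quartiles(example)
print(quartiles)
```

Under this assumption, each group's ratings would then be analysed separately, so that ICC can be compared between Q1 and Q4 and across the intermediate quartiles.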

Third, the study extends previous research by introducing gamification to the IQ assessment domain. It attempts to avoid rhetorical gamification, that is, adding game features merely for appearance, by redesigning the assessment process itself. The evidence shows that gamification increased assessors' motivation, which led to better inter-rater agreement and consequently improved IQ assessment. This is also consistent with previous findings (Kasurinen and Knutas 2018; Treiblmaier and Putz 2020) that the gamification domain is immense and that researchers continuously discover new application areas.

Fourth, the study aims to encourage other researchers to replicate it in alternative settings to validate or complement our findings. Further studies should investigate additional factors that influence inter-rater reliability, such as the heuristic principles used by participants, different sources of information, the size of the source under investigation, and the attributes of assessors that affect the results.

Information workers and researchers can benefit from our findings by designing IQ assessments that take advantage of increased motivation. This study shows that increased motivation can be achieved through gamification, that is, by including elements such as points, badges, and leaderboards.

Researchers studying IQ assessment should recognize motivation as a vital factor affecting it. Where applicable, they should consider including gamification in their studies. Because our participants also included students, our results demonstrate that gamification can be used successfully with students. Teachers creating gamified IQ tasks should focus on improving the assessment process itself rather than adding gamification features merely to make it look gamified.

Finally, the research provides insight into IQ and its dimensions for consumers of short online news. Many users are not aware of IQ dimensions and, with this insight, might start to consume content in a more informed way.

4.4 Study validity

In the design phase, and later in the data collection and analysis phases, we took several measures to increase the validity of our research.

To support internal validity, all participants in the experiment went through the same gamified process, with equivalent study materials, questions, and method of data collection. The tool used in the experiment was intuitive and easy to use, so no special training was required before participation, although we gave participants an initial introduction to the IQ dimensions to ensure that they understood the metrics being measured, as outlined in section 3.2. To minimize the instrumentation threat, all measured variables were captured automatically and accurately. The participants were not aware of the research goal; they simply aimed to achieve the highest score in the gamified environment.

We addressed external validity requirements by using an experimental setup that represents a real-world situation and a test population with the knowledge expected of the general population. To maximize external validity, we followed the requirements outlined by Carver et al. (2010). As far as generalization is concerned, Mullinix et al. (2015) found considerable similarity between many treatment effects obtained from convenience samples and from nationally representative population-based samples.

Concerning construct validity, participants were not subject to any pressure, and participation in the study was voluntary, which minimized the mortality threat. To avoid unintentionally influencing participants' behavior, there was no interaction between researchers and participants during the experiment, and the study's goals were not disclosed. The problem domain in the gamified environment was selected to minimize any bias introduced by participants' familiarity with it, which could have skewed the results in favor of some participants.

In terms of conclusion validity, we used a robust measure of inter-rater agreement, the ICC, to draw statistically sound conclusions from the collected data. To compare our results with findings in the literature, we included several ICC interpretations. We argue that the number of participants and the amount of data collected were sufficient to draw reliable conclusions. Our results support the explanation that a rater's motivation affects the measurability of IQ.
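The specific ICC form is not restated in this section. As an illustration only, the Python sketch below computes ICC(2,1) as defined by Shrout and Fleiss (1979): two-way random effects, absolute agreement, single rater, with rated items as rows and raters as columns. Whether this matches the form used in the study is an assumption, and the sketch also assumes a complete rating matrix with no missing ratings. On the six-item, four-judge example from Shrout and Fleiss, it returns approximately 0.29.

```python
from statistics import fmean


def icc2_1(ratings: list[list[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single rater.
    Rows are rated items (targets), columns are raters; every rater must
    rate every item."""
    n = len(ratings)     # number of items
    k = len(ratings[0])  # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete n x k matrix with n, k >= 2")

    grand = fmean(x for row in ratings for x in row)
    row_means = [fmean(row) for row in ratings]
    col_means = [fmean(row[j] for row in ratings) for j in range(k)]

    # Two-way ANOVA sums of squares: items, raters, and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Six targets rated by four judges (Shrout and Fleiss 1979, worked example).
SHROUT_FLEISS = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc2_1(SHROUT_FLEISS), 2))  # → 0.29
```

Because this form penalizes systematic differences between raters (the `ms_cols` term), it measures absolute agreement rather than mere consistency. A matrix with no variance at all makes the denominator zero, so real data should be checked for that case first.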

4.5 Limitations

Nevertheless, our study has some limitations that should be acknowledged. We wanted to include as large and heterogeneous a group of participants as possible, so we made the study available to the widest possible audience. Because the study was not conducted in a lab setting, we could not control all aspects of IQ assessment. For example, we could not ensure that each participant completed the entire survey alone, without assistance. However, by following the requirements outlined by Carver et al. (2010) and iteratively improving the study during the design phase, we believe our findings are relevant. As discussed in section 3.2, we also addressed this issue by preprocessing and cleaning the obtained data.

Another limitation of this study is that age groups are not equally represented; the median participant age was 20 years, as presented in section 3.2. Because the research was available to the widest possible audience, we had limited influence on the age of the participants. It would thus be insightful to replicate the study with all age groups equally represented. Although we found no statistically significant differences, the literature reports that some demographic factors may affect the perceived benefits of gamification (Koivisto and Hamari 2014).

We should also be aware of limitations arising from participants' ability to assess the quality of an object in terms of IQ (Arazy and Kopak 2011). IQ assessment has proved to be difficult; Arazy, Kopak, and Hadar (2017) showed that achieving agreement among assessors can be challenging.

For hints, we used paragraph-size documents instead of full-size text documents. Fidler and Lavbič (2017) found that shortening the text from full size to paragraph size does not affect the level of agreement in information quality evaluations. Nevertheless, future studies should consider using full-size text hints, which might lead to a better user experience while retaining the same IQ perception.

Finally, only one problem domain was used in our study, as presented in section 3.1. Creating gamified content for additional domains requires considerable effort, especially defining game levels for evaluating specific IQ dimensions. Future replications should therefore apply our study to other problem domains.

5 Conclusion

Reaching consensus on IQ assessment is challenging, and the factors that drive successful estimation of IQ have not been fully explored. This study extends related work and confirms the effect of motivation as a driving factor for improved IQ assessment. We conclude that the innovative gamified IQ assessment was effective, particularly for IQ dimensions that the literature has found more amenable to consistent judgment. It increased participant engagement through shortened assessment content and the inclusion of gamification features such as points, levels, progress bars, and a leaderboard.

The level of agreement achieved with the most motivated group of participants (Q1) was superior in comparison with results from related work. Concerning the inter-rater agreement across the four IQ dimensions, we demonstrate that the relationship between individual IQ dimensions varies with motivation. With increasing motivation, the inter-rater agreement consistently improved for the dimensions of objectivity, completeness, and accuracy. For the representation dimension, inter-rater reliability improved in the initial three quartiles.

Overall, gamification proved to be very useful in the field of IQ assessment. Thus, we strongly recommend that further IQ assessment studies control for the influence of motivation and consider including a gamification approach. When a different IQ source is investigated, participants' foreknowledge might also be a key factor. Further studies could investigate the association between the amount of foreknowledge and inter-rater reliability.